High-Tech Software House

(5.0)

Depth perception is the core component of modern robotic systems regarding path planning, obstacle avoidance, localization, mapping or more general scene understanding. It is challenging because it requires specialized active sensors like, e.g., Light Detection and Ranging (LiDAR) modules or multiple passive Red Green Blue (RGB) cameras organized in one system to estimate the depth from color images. However, while LiDARs have a lot of advantages, they also suffer from some drawbacks, i.e., they can be too expensive for amateur robotics enthusiasts and are ineffective in challenging environmental conditions (e.g., fog, dust, or rain). On the other hand, estimating the depth using multiple RGB cameras holds the drawbacks of these sensors, e.g., it requires a tracking system of some key points in images, and they cannot be registered on plain textures. Hence, depth estimation would break on such surfaces.

But what if we could overcome problems with RGB cameras while maintaining affordable prices below the LiDAR level? The answer is RGBD camera, where the D stands for depth. It is a type of sensor that can utilize multiple techniques to estimate the depth, like mentioned stereo vision and structured light or could work in a way LiDARs work - using the time of flight of the emitted light beam. All that is affordable, increasing the accessibility of robotics sensors for all robotics enthusiasts, not only professionals. The following post will explain depth cameras, what technology they use to estimate the depth and how we can use them in robotics, namely speaking in Visual Simultaneous Localization and Mapping (VSLAM) (see our post about SLAM). Additionally, we demonstrate such a SLAM algorithm based on RGBD data in a real-world example in our office in Poznań.

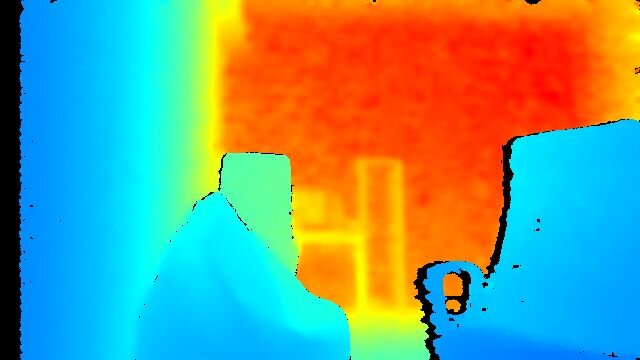

A depth map represents the distance between a scene and the camera that captured it in the form of an image, where each pixel has an associated value of how far it lies in the 3D space. Depth maps are typically used in robotics and computer vision for autonomous navigation and related tasks, like object detection or segmentation. As mentioned, we can create them using specialized hardware such as depth sensors or estimates from multiple images. Often, depth images are represented as a single channel grayscale image, where every pixel represents the object's distance to a camera, and the most popular format is a 16-bit unsigned integer. However, it is also possible to visualize a depth map using color maps where different colors represent different distances from the camera, e.g., close objects are bluer, while the background goes towards red. Using color to represent depth can make it easier to interpret the depth map, especially when working with complex 3D scenes. When dealing with depth images, it's essential to recognize a proper numerical format in which pixels are encoded because it affects our perception of distance - we have to know which pixel values represent what distance. The picture below presents an example of the depth map represented as a grayscale image with distances encoded in a colormap.

Stereovision

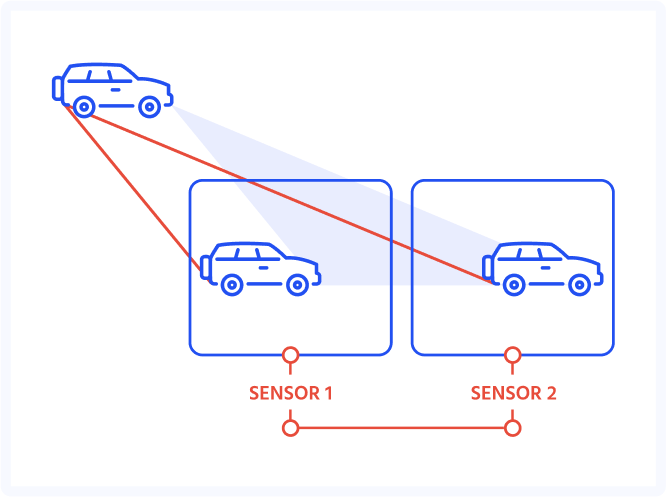

Probably the most natural method that allows for depth perception is the one utilizing two "eyes" sharing a field of view - stereovision. It derives from how the human brain estimates the distance to objects based on views from our eyes. In robotics, a stereovision system consists of two cameras spaced with a known distance between them called a baseline. We can calculate a disparity between the same features localized in both images in such a setup. A technique for depth estimation using multiple cameras is triangulation.

Moreover, stereovision can also use an active approach, with an Infrared (IR) projector, to enhance a system with more visible texture features, which helps with texture-less surfaces like walls. Since the pattern is projected in the infrared spectrum, it's not visible to the human eye. It's worth mentioning that this method indirectly measures the distance and can be used with multiple cameras since the measurement does not affect others. Generally, a stereovision might be a good choice when working in an outdoor environment with rich natural or artificial texture. Its performance depends on the characteristics of the optics and baseline distance.

Examples of devices: Intel RealSense D400 family, Nerian SceneScan Pro

Structured light

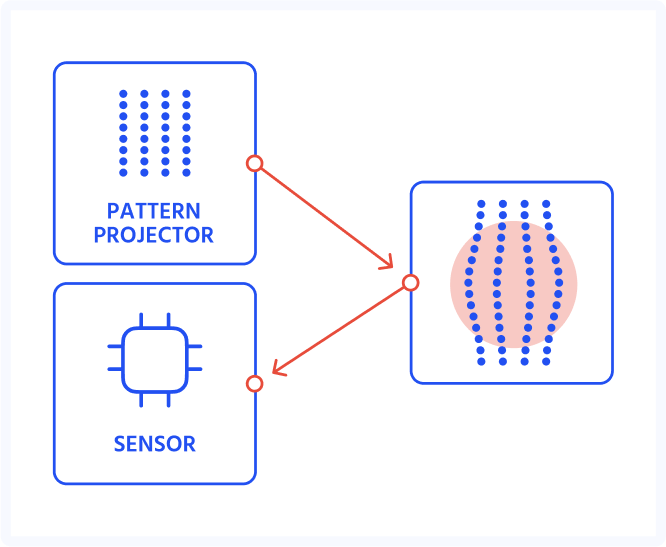

Another way to obtain a depth estimate is a method known as structured light. It uses a predefined (usually) infrared pattern emitted by a projector that is then perceived by a camera. The depth is calculated based on differences between a deformed pattern on surfaces and a reference pattern. This method is dedicated to indoor applications due to vulnerability to sun IR light and also suffering from interference with, e.g., other structured light cameras. SL solutions are active sensors requiring more power than stereovision systems.

Examples of devices: Kinect v1, Primesense Carmine

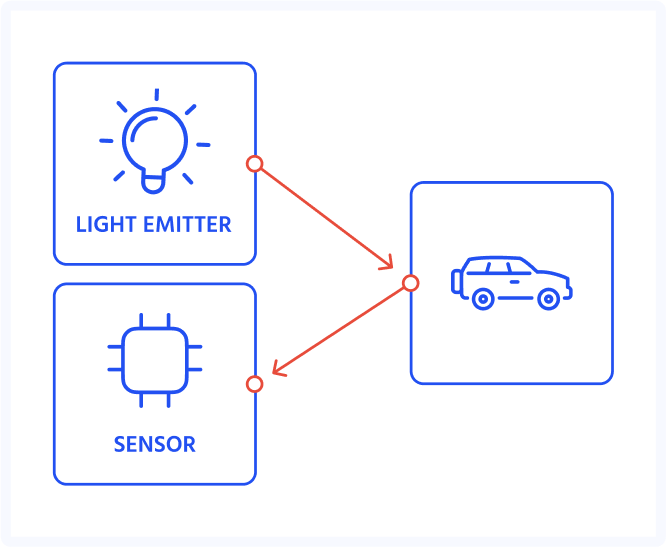

Time of Flight

The last method analyzed in the blog is a time of flight depth measurement. Distance between a camera and an object directly relates to the time between emitting a beam and its comeback because the speed of light is constant. The depth is calculated separately for every pixel of a sensor matrix. Similar to structured light, this approach is prone to noise from strong sunlight when operating outdoors and other devices emitting IR light. On the other side, they perform well in low light conditions and usually have better accuracy and working distance.

Examples of devices: Kinect v2, flexx2

Our sensor - RealSense D435

In Flyps, we used an Intel RealSense D435 in an AMR/AGV robot project. We used depth information and an RGB to position a robot in a predefined place relatively. This camera collects depth images in resolutions up to 1280 x 720 and a frame rate of up to 90 fps. RGB images are in resolutions up to 1920 x 1080 and have a frame rate of 30 fps. The depth sensor uses a global shutter, while the RGB sensor captures images with a rolling shutter. The camera uses an active stereo vision approach. The following figure shows the IR pattern viewed in the camera.

Demo in our office (RTAB-Map SLAM algorithm)

RGBD cameras are useful in robotics. One of the prominent examples of usage is visual simultaneous localization and mapping. In the example below we used a RTAB-Map SLAM algorithm, to generate a map of a room in our office.

You can reproduce our results using data from our office stored in rosbag or run an algorithm with a handheld camera. Here’s a quick tutorial on how to run a RTAB-Map SLAM with Intel Realsense D435 on your machine. You can use a standalone version of a package on Ubuntu, iOS or Windows, but we’ll focus on a ROS package. We assume that you’ve got ROS Noetic Ninjemys installed, along with a realsense driver. If not, follow the instructions here and here.

To install a RTAB-Map package, use the following command:

When installation is complete, you can run a ROS node, which launch D435 camera:

Or you can start our rosbag with command:

Next just start a rtabmap.launch from rtabmap_ros package:

We hope you found this article interesting. Our engineers and experts face the challenges posed by the search for the most effective use of high-tech solutions in business every day.

If you would like to talk about how we can help your business and solutions (not only related to computer vision, autonomous vehicles and robotics) do not hesitate to contact us! You can do it via the form or directly via email [email protected]. Together we will find the most effective and optimal solution that will scale your business.

See our tech insights

Like our satisfied customers from many industries